In our hyper-connected digital ecosystem, data has transformed from a passive resource into a dynamic, strategic asset. As businesses and researchers increasingly rely on sophisticated information gathering techniques, web scraping has emerged as a powerful methodology that transcends traditional data retrieval approaches.

Imagine being able to extract targeted information from across the internet with surgical precision, reaching data that is impractical to collect by hand and turning raw digital content into actionable intelligence. This is the promise of modern web scraping technologies, a realm where programming meets strategic information acquisition.

The Evolution of Data Extraction: From Manual to Automated

The journey of data extraction is a story of technological innovation. In the early days of the internet, researchers and analysts copied and pasted information by hand, a time-consuming and error-prone process. As websites proliferated and digital content exploded, manual methods became increasingly inadequate.

The first generation of web scraping tools consisted of rudimentary scripts that could parse basic HTML structures. These early solutions were fragile, breaking with even minor website changes. Developers quickly realized that effective data extraction required more sophisticated approaches that could adapt to dynamic web environments.

Understanding Web Scraping: Technical Foundations and Methodologies

Web scraping is not merely a technical process but a complex ecosystem of technologies working in harmony. At its core, the methodology involves sending HTTP requests to web servers, retrieving HTML content, and then parsing that content to extract specific information.
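As a minimal sketch of the parsing step described above, the following example uses only Python's standard library. The HTML string, the `LinkExtractor` class, and the `extract_links` helper are illustrative assumptions; in a real scraper the HTML would come from the body of an HTTP response (for example via `urllib.request.urlopen`) rather than a hard-coded string.

```python
from html.parser import HTMLParser

# Hypothetical page content standing in for a downloaded HTTP response body.
SAMPLE_HTML = """
<html><body>
  <h1>Product list</h1>
  <a href="/items/1">Widget</a>
  <a href="/items/2">Gadget</a>
</body></html>
"""


class LinkExtractor(HTMLParser):
    """Collect (href, text) pairs for every <a> element that has an href."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None   # href of the <a> currently being read, if any
        self._text = []     # text fragments seen inside that <a>

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def extract_links(html):
    """Parse an HTML string and return the links it contains."""
    parser = LinkExtractor()
    parser.feed(html)
    parser.close()
    return parser.links


if __name__ == "__main__":
    print(extract_links(SAMPLE_HTML))
```

Libraries such as BeautifulSoup or lxml offer more convenient selectors for the same task; the standard-library parser is used here only to keep the sketch dependency-free.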

Modern web scraping frameworks leverage multiple technologies:

- Advanced parsing libraries like BeautifulSoup and lxml

- Browser automation tools such as Puppeteer and Selenium, which can drive headless browsers

- Sophisticated proxy management systems

- Machine learning algorithms for intelligent data extraction

The Technical Architecture of Effective Web Scraping

Successful web scraping requires a multi-layered approach. Consider the following technical framework:

- Request Management: Sending intelligent, adaptive HTTP requests that mimic human browsing behavior

- Content Retrieval: Downloading web page content efficiently

- Parsing and Extraction: Identifying and extracting relevant data elements

- Data Transformation: Converting raw HTML into structured, usable formats

- Storage and Analysis: Organizing extracted information for further processing
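The later stages of this framework (transformation and storage) can be sketched as follows. This is an illustration under stated assumptions, not a prescribed design: the `RAW_ROWS` records, the field names, and the `transform` and `to_csv` helpers are all hypothetical, and the raw rows stand in for whatever the parsing stage would produce.

```python
import csv
import io

# Hypothetical raw records, as they might come out of the parsing stage.
RAW_ROWS = [
    {"name": "  Widget ", "price": "$1,299.00"},
    {"name": "Gadget", "price": "$49.50"},
]


def transform(row):
    """Data transformation: trim whitespace and convert the price to a float."""
    price = float(row["price"].replace("$", "").replace(",", ""))
    return {"name": row["name"].strip(), "price": price}


def to_csv(rows):
    """Storage: serialize structured rows to CSV text."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["name", "price"], lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


structured = [transform(row) for row in RAW_ROWS]
csv_text = to_csv(structured)
print(csv_text)
```

Keeping each stage in its own function means the parsing logic can change when a site's markup changes without touching the transformation or storage code.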

API vs Web Scraping: A Comprehensive Comparative Analysis

While Application Programming Interfaces (APIs) have long been considered the gold standard for data retrieval, they come with significant limitations. APIs often provide restricted access, impose strict rate limits, and can be prohibitively expensive for large-scale data collection.

Web scraping offers a more flexible alternative. By directly accessing website content, organizations can:

- Retrieve data not available through official APIs

- Customize data extraction parameters

- Reduce dependency on third-party data providers

- Implement more cost-effective information gathering strategies

Technical Challenges and Solutions

Web scraping is not without its challenges. Modern websites present technical obstacles and employ sophisticated defense mechanisms:

- Complex JavaScript rendering

- Dynamic content loading

- IP-based rate limiting

- Advanced bot detection algorithms
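Of the challenges listed above, rate limiting is the simplest to illustrate. One common way to respond to it is to retry with exponential backoff, sketched below. The `RateLimited` exception, the `fetch_with_backoff` helper, and the stand-in `flaky_fetch` function are assumptions made for this example; a real fetch function would perform an HTTP request and raise when the server responds with a status such as 429.

```python
import time


class RateLimited(Exception):
    """Signals that the server asked the client to slow down (e.g. HTTP 429)."""


def fetch_with_backoff(fetch, url, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url), doubling the wait after each rate-limited attempt."""
    for attempt in range(max_attempts):
        try:
            return fetch(url)
        except RateLimited:
            if attempt == max_attempts - 1:
                raise  # give up after the final attempt
            sleep(base_delay * 2 ** attempt)


# Demo with a stand-in fetch function that is rate limited twice, then succeeds.
attempts = {"count": 0}


def flaky_fetch(url):
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise RateLimited()
    return f"<html>content of {url}</html>"


recorded_delays = []  # capture the delays instead of actually sleeping
page = fetch_with_backoff(flaky_fetch, "https://example.com/", sleep=recorded_delays.append)
print(page, recorded_delays)
```

Passing `sleep` as a parameter keeps the retry logic testable without real waiting; in production the default `time.sleep` would apply.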

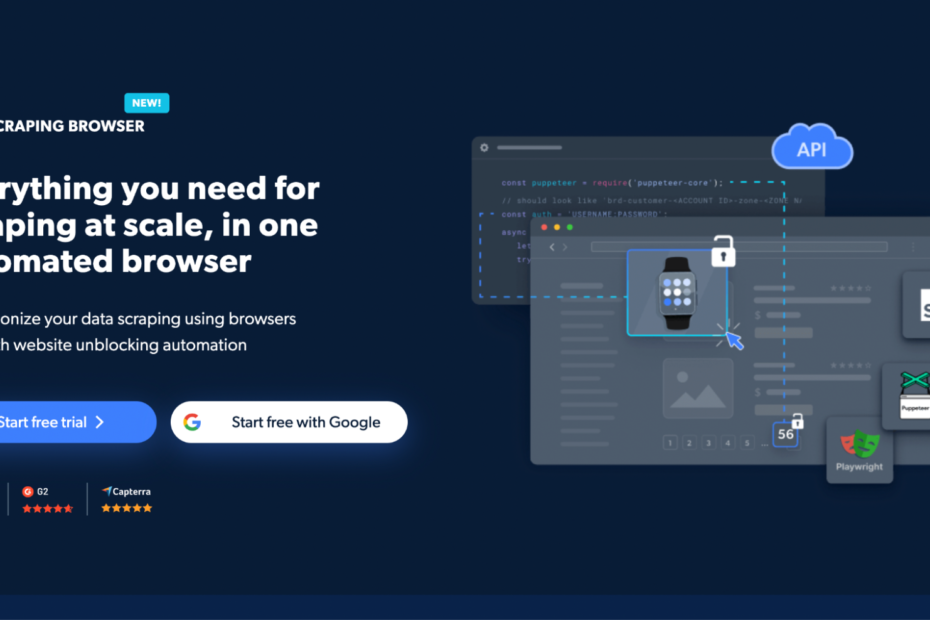

Advanced scraping solutions like Bright Data’s Scraping Browser have emerged to address these challenges, offering:

- Intelligent proxy rotation

- Browser fingerprint emulation

- Automated CAPTCHA solving

- Seamless integration with existing development frameworks

Industry-Specific Use Cases and Applications

E-commerce and Competitive Intelligence

Retailers and market researchers leverage web scraping to:

- Monitor competitor pricing strategies

- Track product availability

- Analyze market trends

- Develop dynamic pricing models

Financial Services and Investment Research

Investment professionals use web scraping to:

- Gather real-time market sentiment

- Track emerging economic trends

- Analyze company performance metrics

- Develop predictive financial models

Academic and Scientific Research

Researchers utilize web scraping to:

- Collect large-scale datasets

- Track scientific publication trends

- Analyze global research patterns

- Support complex statistical modeling

Ethical Considerations and Best Practices

While web scraping offers immense potential, it’s crucial to approach the technology responsibly. Key ethical guidelines include:

- Respecting website terms of service

- Avoiding the collection of personal or sensitive information

- Implementing reasonable request rates

- Maintaining transparency in data collection methods
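One practical way to act on these guidelines is to consult a site's `robots.txt` before fetching a page. The sketch below uses Python's standard `urllib.robotparser` module. The `ROBOTS_TXT` content, the `USER_AGENT` name, and the example URLs are hypothetical; a real scraper would load the file from the target site, and `robots.txt` complements a site's terms of service rather than replacing them.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; normally this is downloaded from the site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

USER_AGENT = "example-research-bot"  # assumed name identifying the scraper

robots = RobotFileParser()
robots.parse(ROBOTS_TXT.splitlines())


def is_allowed(url):
    """Return True if robots.txt permits USER_AGENT to fetch the URL."""
    return robots.can_fetch(USER_AGENT, url)


allowed = is_allowed("https://example.com/products")
blocked = is_allowed("https://example.com/private/report")
delay = robots.crawl_delay(USER_AGENT)  # seconds to wait between requests, if declared

print(allowed, blocked, delay)
```

A scraper could skip any URL for which `is_allowed` returns `False` and wait at least `delay` seconds between requests to keep its request rate reasonable.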

Future Trends and Technological Horizons

The future of web scraping is intrinsically linked to emerging technologies:

- Artificial Intelligence integration

- Machine learning-powered extraction algorithms

- Enhanced privacy protection mechanisms

- More sophisticated anti-detection technologies

Conclusion: Embracing the Data Extraction Revolution

Web scraping represents more than a technical methodology: it’s a strategic approach to understanding our increasingly complex digital landscape. By combining advanced technologies, ethical practices, and innovative thinking, organizations can transform raw internet content into meaningful, actionable insights.

As digital information continues to expand exponentially, web scraping will become an essential skill for researchers, businesses, and innovators seeking to navigate the complex world of data extraction.