As an AI expert closely following OpenAI's efforts to restore ChatGPT's conversation history after the recent data exposure, I've considered what it takes to retool an AI system that interfaces with millions of unpredictable users. Navigating issues at this scale is far from straightforward.

To grasp the technical complexity, imagine debugging a system built around a language model with on the order of 175 billion parameters, whose behavior grows harder to predict as the variety of human inputs multiplies. Compare this to past incidents like Facebook's Cambridge Analytica scandal, which involved up to 87 million users, or the privacy breach tied to Google's short-lived Buzz, which affected just over 40 million. OpenAI must chart far more uncertain waters.

Delicately patching vulnerabilities in ChatGPT's architecture to restore history without compromising privacy is therefore an intricate process that demands methodical version control. It resembles refurbishing a jetliner mid-flight: no quick fixes or shortcuts exist at 35,000 feet.

Some may wonder why OpenAI cannot simply revert to a pre-bug backup. But machine learning systems keep evolving, and turning back the clock risks losing capability gains from continued training. Just as people cannot return to their infant selves, AI systems build on prior learning.

Why Transparency Now Matters More Than Ever

As AI advances such as ChatGPT deliver phenomenal new utility, the technology remains largely opaque to the public. When systems fail, fear and confusion often follow, which is why transparency is vital for nurturing human trust in AI.

Recent surveys have found that nearly 70% of consumers want businesses to be more transparent about how they use AI, while 65% express concern about AI-related data privacy. As expectations rise, companies leveraging AI must open their code, data practices and responsibility structures to scrutiny.

ChatGPT's breadth of use cases, spanning education, business and leisure, positions OpenAI as an influential leader in AI development. How the company publicly handles this incident can set precedents on user consent, data security and transparency that shape industry norms. Upholding public trust now depends on its capacity for open, constructive dialogue about what happened.

Learning From Setbacks on the Winding Path Ahead

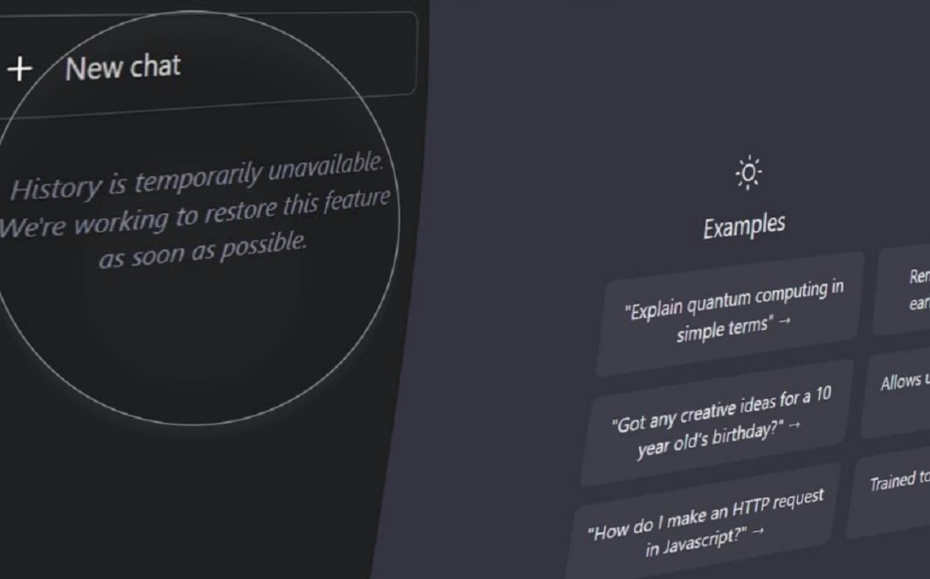

While the complex work of restoring functionality continues behind the scenes, users anxiously await updates from OpenAI. This presents a prime opportunity for the company to reinforce its commitments to ethics and privacy while managing expectations.

I would advise OpenAI to implement more stringent safeguards for user data, commission routine third-party audits going forward and expand explanation features to strengthen accountability. Establishing oversight committees that include external critics may also help keep AI priorities grounded in benefiting society.

Progress never follows a straight line; occasional missteps are inevitable. How institutions respond in these moments defines their ultimate trustworthiness and stability. With conscientious improvements, ChatGPT can emerge better positioned to realize its extraordinary potential to serve humanity.