My friend, are you fascinated by the creative possibilities of advanced generative AI models like DALL-E and Stable Diffusion? Well, I've got exciting news! Runway, the startup that helped develop Stable Diffusion, just launched an iPhone app built around its Gen-1 model, which brings that same power to video creation.

As an industry expert focused on applied machine learning, I'll guide you through exactly how Gen-1 works, show what it can create, and explore why having tools like this directly on devices represents such a pivotal milestone. Let's dive in!

How Runway's Generative AI Video Models Work

Just as DALL-E produces novel images from text prompts, Gen-1 uses neural networks to generate new video footage or radically transform existing video. This offers creatives like you vastly expanded possibilities! 🤯

Under the hood, Gen-1 utilizes encoder-decoder architectures. Check out this diagram:

The input video gets encoded by the first AI model into a compact latent space representation. Think of this like DNA storing the essential characteristics.

This encoded information is passed to the decoder model, which transforms the latent vectors back into full-fidelity video. It's able to modify attributes such as style, content, and resolution along the way.
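To make the encode-then-decode idea concrete, here's a tiny sketch in Python. It is not Runway's actual model: real systems use learned neural networks, whereas this toy uses fixed average pooling as the "encoder" and simple repetition as the "decoder". The names `encode`, `decode`, `factor`, and `brightness` are all made up for illustration.

```python
from typing import List

Frame = List[float]  # one flattened video frame (hypothetical toy representation)


def encode(frame: Frame, factor: int = 4) -> List[float]:
    """Compress a frame into a shorter latent by averaging each run of `factor` pixels."""
    if len(frame) % factor != 0:
        raise ValueError("frame length must be a multiple of factor")
    return [sum(frame[i:i + factor]) / factor for i in range(0, len(frame), factor)]


def decode(latent: List[float], factor: int = 4, brightness: float = 1.0) -> Frame:
    """Expand a latent back to frame size, optionally changing one attribute on the way."""
    return [value * brightness for value in latent for _ in range(factor)]


# Two toy "frames" of eight pixels each (assumed data, not real video).
video = [
    [0.0, 0.0, 0.0, 0.0, 1.0, 1.0, 1.0, 1.0],
    [0.25, 0.25, 0.25, 0.25, 0.75, 0.75, 0.75, 0.75],
]

latents = [encode(frame) for frame in video]                   # compact representation
restored = [decode(z) for z in latents]                        # back to full size
brighter = [decode(z, brightness=1.5) for z in latents]        # modified on decode
```

Each latent here holds two numbers instead of eight, and the decoder can change an attribute (brightness, in this toy) while rebuilding the frame. A learned model does the same thing in spirit, just with far richer attributes than brightness.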

By interpolating between points in this latent space, Gen-1 produces new outputs that keep the essence of the original video while exploring variations.
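Latent interpolation is easier to picture with numbers. Here's a minimal Python sketch of plain linear interpolation between two latent vectors. I'm assuming three-dimensional latents and made-up values purely for illustration; the helper names `lerp` and `interpolation_path` are mine, and Gen-1's real latents and blending method may well differ.

```python
from typing import List


def lerp(z_a: List[float], z_b: List[float], t: float) -> List[float]:
    """Blend two latent vectors: t=0 returns z_a, t=1 returns z_b."""
    if len(z_a) != len(z_b):
        raise ValueError("latent vectors must have the same length")
    return [(1 - t) * a + t * b for a, b in zip(z_a, z_b)]


def interpolation_path(z_a: List[float], z_b: List[float], steps: int) -> List[List[float]]:
    """Return `steps` evenly spaced latents from z_a to z_b, endpoints included."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    return [lerp(z_a, z_b, i / (steps - 1)) for i in range(steps)]


# Hypothetical latents: one for the original clip, one for a stylized variation.
z_original = [0.0, 2.0, -1.0]
z_variation = [1.0, 0.0, 1.0]

path = interpolation_path(z_original, z_variation, steps=5)
```

Decoding each latent along `path` would give a gradual morph from the original toward the variation, which is the intuition behind "exploring variations" while keeping the essence of the source.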

Now, you may be wondering – why use encoder-decoders over other generative video networks like GANs?

Great question! GANs tend to produce less diverse output and are notoriously difficult to train properly. Encoder-decoder models offer more user control and more stable training, which helps them translate specific requests accurately.

Make sense? Now let's see how we can harness this deep learning alchemy…

Walkthrough: Creating AI-Generated Video on iOS with Gen-1

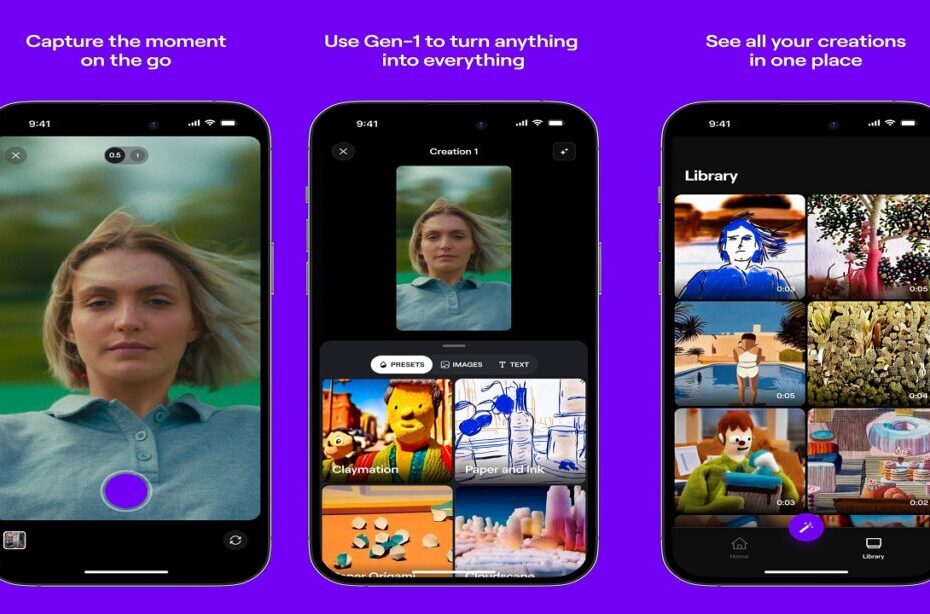

Getting started is easy. Just download the RunwayML app from the iOS App Store. Once you log in, your Gen-1 feed awaits!

First, I'll show you how to apply style transfer to an existing video using one of Gen-1's artistic filters. Tap the '+' button, then select a clip from your camera roll.

I chose this short video I took of a fountain the other day:

Gen-1 has a fabulous collection of rendering effects ranging from prismatic distortion to pixel art glitches. For my fountain, I think the oil painting filter looks enticing!

After you pick the style, Gen-1 teases us with four preview renders to consider. I'll go with preview #3 since its brush strokes look boldest.

Now we eagerly await the results! Gen-1's UX shows a fun behind-the-scenes view of the model at work transforming, stylizing, and compiling our new creation.

A minute later, the rendered video has finished processing. Here's the painterly final product:

Delightful! 🎨 The style transfer endowed my fountain clip with quite a charming Impressionist aesthetic. I love how the flowing water remains fluid while taking on an elegantly brushed quality.

Let's level up and see what we can conjure straight from imagination…

Generating New Video Content Purely from Text Prompts

Beyond transforming source videos, Gen-1 can manifest visions from thin air given just a text description. To try it out, I'll describe a short scene:

"A large green alien spaceship flying over a bustling city at night with lights shimmering below."

In just seconds, Gen-1 worked its magic to generate four fresh video samples:

I found the 2nd preview most intriguing and selected it. The model then processed my full video:

Remarkable! With no input other than my text description, Gen-1 imagined and rendered a novel video matching the scene I envisioned. 🛸

We're getting a glimpse here of the game-changing potential when advanced generative AI operates directly on devices instead of just via web APIs.

Why On-Device AI Will Change the Game

Delivering neural network models directly to consumer hardware unlocks new capabilities like those Gen-1 demonstrates. Benefits include:

- Faster generation – No need to send data to servers! Execute locally for snappy results.

- Offline functionality – With the model on your device, no internet required.

- Enhanced privacy & control – Data stays local rather than transmitting to the cloud.

- Personalization – Possibilities for users to fine-tune models over time to match their preferences.

And as AI algorithms continue to evolve rapidly, on-device deployment helps future-proof apps compared with relying on constant server-side upgrades. Exciting times ahead!

Pricing & Plans

There's a free tier where you can build up to 3 videos to try Gen-1 out. After that:

- Hobby – $14/month or $140/year

- Pro – $49/month or $490/year

Well within reach for creative hobbyists! Power users can upgrade to Pro.

Considering these tools required tens of millions in R&D and compute to develop, I think they've priced access very fairly.
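If you're weighing monthly against annual billing, the arithmetic is quick to check. This small Python sketch uses only the prices listed above; the `PLANS` table and the `annual_savings` helper are my own names, not anything from Runway.

```python
# Prices from the plan list above: (monthly price, yearly price) in US dollars.
PLANS = {
    "Hobby": (14, 140),
    "Pro": (49, 490),
}


def annual_savings(monthly: int, yearly: int) -> tuple:
    """Return (dollars saved, percent saved) when paying yearly instead of monthly."""
    twelve_months = monthly * 12
    saved = twelve_months - yearly
    return saved, saved / twelve_months * 100


for name, (monthly, yearly) in PLANS.items():
    saved, percent = annual_savings(monthly, yearly)
    print(f"{name}: save ${saved} per year ({percent:.1f}%) by paying annually")
```

On both plans the yearly price equals ten months of the monthly price, so annual billing works out to roughly two months free.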

Go Create Something Marvelous!

I hope you're as fired up as I am about Gen-1's potential! We're entering a new era of empowered creativity thanks to the rise of on-device AI.

My advice? Simply start playing with it! Describe a dream scene, pick an artistic style for your selfies, turn your cat into an anime character. This technology thrives when guided by human imagination and intent.

If you make something cool, make sure to share it with me! I can't wait to see what marvels my talented friend can conjure. The future is in your hands now – go create something wonderful! 😊

Chat soon,

[Your Name]