The ability of language models like GPT-2 to produce synthesized text that is often hard to tell apart from human writing has impressed many, but it also raises critical questions. How can we verify that an article, essay or post was actually written by a person? Enter AI detectors: classifiers designed to spot machine-generated text, an emerging capability that helps us separate artificial prose from authentic human voices.

In this comprehensive guide, we dive deep into how GPT-2 output detectors work, challenges in improving them, and their applications in upholding integrity across academic, professional and public discourse. We also discuss why ethical considerations must remain at the heart of further progress in this domain.

GPT-2: A Primer

Let's first briefly recap what GPT-2 is and why it produces such human-like writing. GPT-2 is built on the transformer, a neural network architecture that has reshaped natural language processing. Unlike earlier recurrent models, which read text one word at a time, a transformer relates every token in its input to the others in parallel through a mechanism called self-attention. This lets it capture nuanced context and long-range dependencies in text. GPT-2 still generates text one token at a time, with each new token attending only to the tokens that came before it.
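To make self-attention a little more concrete, here is a minimal sketch in plain Python of scaled dot-product attention with the causal restriction that GPT-2-style models use (each position only looks at itself and earlier positions). It is a simplification under stated assumptions: a real transformer derives queries, keys and values from learned projections and uses many attention heads, whereas this toy reuses the same hypothetical input vectors for all three.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def causal_self_attention(queries, keys, values):
    """Scaled dot-product attention where position i sees only positions <= i."""
    d = len(queries[0])
    n = len(queries)
    outputs, weights = [], []
    for i, q in enumerate(queries):
        # Score the current position against itself and every earlier position.
        scores = [
            sum(a * b for a, b in zip(q, keys[j])) / math.sqrt(d)
            for j in range(i + 1)
        ]
        w = softmax(scores)
        # Each output is a weighted sum of the visible value vectors.
        out = [
            sum(w[j] * values[j][k] for j in range(i + 1))
            for k in range(len(values[0]))
        ]
        outputs.append(out)
        # Pad with zeros so every row has one weight per position.
        weights.append(w + [0.0] * (n - i - 1))
    return outputs, weights

# Toy 3-token sequence with 2-dimensional vectors (made-up values).
# Assumption: the inputs stand in for queries, keys and values alike.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = causal_self_attention(x, x, x)
```

Each row of `weights` shows how much one token draws on the tokens it is allowed to see. The first token can only attend to itself, while the last one mixes information from all three.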

In 2019, OpenAI showed that simply training a transformer on a massive text corpus can yield an exceptionally capable language generation system: GPT-2. Able to adapt its writing style to different contexts, topics and authors, GPT-2 produces eerily convincing synthetic text.

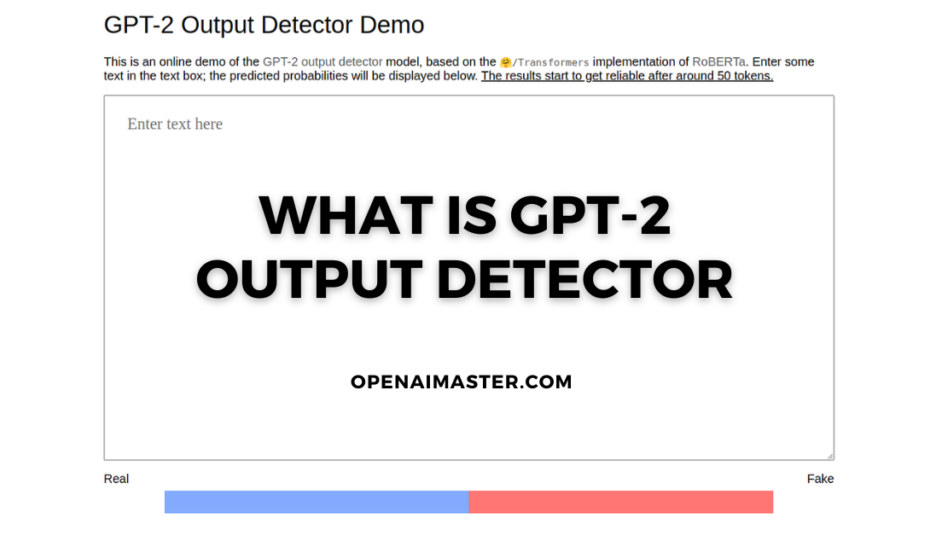

Fig 1: Simplified transformer-based architecture of GPT-2

No wonder then that detecting if a piece of writing was crafted by GPT-2 versus penned by a human remains an open research problem – a problem that output detectors aim to tackle.

Why Do We Need To Detect GPT-2 Output?

With neural language models rapidly advancing to produce increasingly coherent long-form text, the line between artificial and authentic prose is blurring. This necessitates mechanisms to verify if text could have been generated by models like GPT-2 before disseminating or acting upon it. Specific use cases where output detectors would prove beneficial include:

Upholding Academic Integrity: Detectors can assist in identifying AI-generated text used for plagiarism or cheating in submissions. This helps maintain integrity in academia.

Combating Misinformation: Before publications, social media posts or policy decisions rely on a specific text, running output detectors could flag synthetically generated misinformation.

Building User Trust: Mainstream deployment across search engines and social platforms can help assuage user concerns over interacting with AI-generated text without consent.

However, ethical considerations around user privacy, responsible framing and over-reliance on imperfect automated systems do need to be tackled. We discuss these aspects later in the article.

First, let us understand how these detectors are able to spot GPT-2 output with reasonably high accuracy.

Under the Hood: How GPT-2 Output Detectors Work

Most GPT-2 output detectors are supervised machine learning classifiers trained on thousands of text excerpts labeled as either human-written or machine-generated. By learning subtle but systematic differences in patterns, they can classify new text as real or artificial, with some recent methods reporting over 97% accuracy.
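As a rough illustration of the supervised setup, the sketch below trains a tiny classifier on labeled excerpts and then labels new text. It is a toy stand-in, not how production detectors are built: it uses a word-count Naive Bayes model instead of a fine-tuned transformer, and the class name `NaiveBayesDetector`, the four training excerpts and their labels are all made up for the example.

```python
import math
from collections import Counter

def tokenize(text):
    """Lowercase whitespace tokenization; real detectors use subword tokenizers."""
    return text.lower().split()

class NaiveBayesDetector:
    """Toy word-count classifier with add-one (Laplace) smoothing."""

    def fit(self, texts, labels):
        self.counts = {"human": Counter(), "machine": Counter()}
        self.docs = Counter(labels)
        for text, label in zip(texts, labels):
            self.counts[label].update(tokenize(text))
        self.vocab = set(self.counts["human"]) | set(self.counts["machine"])
        return self

    def log_score(self, text, label):
        # Log prior plus the sum of smoothed log likelihoods for each token.
        total = sum(self.counts[label].values())
        score = math.log(self.docs[label] / sum(self.docs.values()))
        for tok in tokenize(text):
            score += math.log(
                (self.counts[label][tok] + 1) / (total + len(self.vocab))
            )
        return score

    def predict(self, text):
        return max(("human", "machine"), key=lambda lab: self.log_score(text, lab))

# Hypothetical labeled excerpts, invented purely for illustration.
train_texts = [
    "honestly i forgot my keys again lol",
    "we argued about the movie all night",
    "the the model is a model of the model",
    "in conclusion the results are the results",
]
train_labels = ["human", "human", "machine", "machine"]

detector = NaiveBayesDetector().fit(train_texts, train_labels)
```

Calling `detector.predict(...)` on a new excerpt returns either `"human"` or `"machine"`. The overall shape (labeled examples in, a learned decision rule out) is the part that carries over to transformer-based detectors; the features and the model do not.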

But what enables this level of detection proficiency? The key aspects are:

Powerful Language Models: Foundation models like BERT, RoBERTa and T5 that have been pre-trained on diverse multi-billion word corpora provide rich representations of language. These models act as automatic feature extractors.

Fine-tuning: The pre-trained language model is then fine-tuned by training it on datasets containing verified human and GPT-2 written text examples. This tailors it specifically for output detection.

Classification Layers: Additional neural network layers added on top of the fine-tuned language model classify an input text snippet as human or machine-authored based on learned pattern recognition capabilities.

Ensembling Models: Combining predictions from multiple such fine-tuned models leads to more robust judgement on the authenticity of text.

Some recent work reports that an ensemble of just five such models can exceed 97% accuracy in detecting GPT-2 generated text, which is higher than most human readers manage.
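The ensembling idea can be sketched in a few lines. This is one simple way to combine models (averaging their probabilities), not necessarily the scheme used in any particular study. The three detectors here are placeholder functions that return fixed scores; in practice each would be a separately fine-tuned model returning its own probability that the text is machine-generated.

```python
from statistics import mean

def ensemble_probability(text, detectors):
    """Average the machine-generated probability reported by each detector."""
    return mean(detector(text) for detector in detectors)

def ensemble_label(text, detectors, threshold=0.5):
    """Label the text by comparing the averaged probability to a threshold."""
    if ensemble_probability(text, detectors) >= threshold:
        return "machine"
    return "human"

# Placeholder detectors with fixed outputs (assumption: stand-ins for real models).
def detector_a(text):
    return 0.9

def detector_b(text):
    return 0.8

def detector_c(text):
    return 0.4

detectors = [detector_a, detector_b, detector_c]
probability = ensemble_probability("some excerpt to check", detectors)
label = ensemble_label("some excerpt to check", detectors)
```

Averaging means a single model's mistake (here, `detector_c` leaning towards "human") is outvoted when the others agree, which is the intuition behind the more robust judgement described above.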

Advances and Challenges in Improving Detection

Detecting whether text could have been written by an advanced language model like GPT-2 is an adversarial race: as detection methods improve, generation systems keep evolving too.

Key fronts in this contest include:

Robustness Against Adversarial Attacks: Detecting text that has been intentionally tweaked to fool the system (known as adversarial samples) remains challenging. Novel regularization methods during training are one way researchers are attempting to address this.

Semi-supervised Learning: The scarcity of verified datasets labeled as human or machine-written is another limitation. Semi-supervised approaches that draw on the far larger pool of unlabeled text are being explored to ease this bottleneck.

Targeted Fine-tuning: Specializing the detectors to perform well in specific domains where AI-generated text may be prevalent can boost accuracy further. Targeting academic writing is one high-value area here.
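One common semi-supervised recipe is pseudo-labeling: let the current detector label unlabeled text, keep only its most confident guesses, and add those to the training set before retraining. The sketch below shows just the selection step. The function name, the 0.9 confidence cut-off and the `predict_proba` callable (assumed to return the probability that a text is machine-generated) are illustrative choices, not taken from any specific paper.

```python
def pseudo_label(unlabeled_texts, predict_proba, confidence=0.9):
    """Keep only the texts the current detector labels with high confidence."""
    accepted = []
    for text in unlabeled_texts:
        p_machine = predict_proba(text)
        if p_machine >= confidence:
            accepted.append((text, "machine"))
        elif p_machine <= 1 - confidence:
            accepted.append((text, "human"))
        # Anything in between is too uncertain and stays unlabeled.
    return accepted

# Hypothetical scores standing in for a trained detector's output.
fake_scores = {"excerpt one": 0.97, "excerpt two": 0.50, "excerpt three": 0.05}
new_examples = pseudo_label(fake_scores.keys(), fake_scores.get)
```

The accepted pairs would then be merged with the verified labels for another round of training. The cut-off matters: set it too low and the detector starts learning from its own mistakes.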

Ongoing research on transformer architectures with over 100 billion parameters and novel deep learning algorithms promises continued progress in this arms race towards reliable output detection.

Real-World Applications and Limitations

Let's now discuss some real-world applications where GPT-2 output detectors could assist in upholding integrity:

Academic Plagiarism Detection: Detectors can help spot submissions generated by language models, mitigating AI plagiarism especially in abstract-writing domains. This safeguards merit-based assessment.

Content Authenticity for Publications: Running output detectors on articles before they reach large audiences or are cited in policies could reduce the impact of artificially generated misinformation.

Social Platform Content Moderation: Deployment across social networks and search engines allows flagging synthesized text lacking disclosure. Maintaining user consent here is vital.

However, limitations users should be aware of include:

- Text length restrictions due to computational constraints

- Over-reliance risks for sensitive decisions without human expertise

- Privileging technical solutions over societal dialogue
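The first two limitations suggest a practical pattern, sketched below under some assumptions: split long text into overlapping windows that fit the model's length limit, average the per-window scores, and route anything in the uncertain middle to a person rather than deciding automatically. The window sizes, the 0.2 and 0.8 thresholds and the `score_window` callable (assumed to return a machine-generated probability for a short piece of text) are hypothetical values chosen for illustration.

```python
def split_into_windows(tokens, window=128, stride=64):
    """Split a token list into overlapping windows (assumes stride <= window)."""
    if len(tokens) <= window:
        return [tokens]
    windows = []
    for start in range(0, len(tokens) - window + stride, stride):
        windows.append(tokens[start:start + window])
    return windows

def triage(text, score_window, window=128, stride=64, low=0.2, high=0.8):
    """Score each window, average the scores, and abstain when unsure."""
    tokens = text.split()
    scores = [
        score_window(" ".join(chunk))
        for chunk in split_into_windows(tokens, window, stride)
    ]
    average = sum(scores) / len(scores)
    if average >= high:
        return "likely machine", average
    if average <= low:
        return "likely human", average
    # The detector is not confident either way: hand off to a human reviewer.
    return "needs human review", average

# Small demonstration with a 10-token list and a 4-token window.
demo_windows = split_into_windows(list(range(10)), window=4, stride=2)
verdict, score = triage("a short ambiguous passage", lambda chunk: 0.5)
```

Keeping an explicit "needs human review" outcome is a design choice that keeps the detector in an advisory role for sensitive decisions instead of letting a single score settle the matter.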

Getting this balance right across ethical, social and technical considerations is what will let these emerging technologies deliver on their potential.

The path forward requires acknowledging current limitations in public discourse while supporting the advancement of detection methods through responsible research and development. With a clear understanding of the blind spots, and continued progress in machine learning, we can build systems that earn rather than demand public trust.

Conclusion

With neural language models making rapid strides, the technology behind GPT-2 output detectors represents an intriguing counterbalance helping uphold integrity in text attribution. We discussed what makes these emerging methods work – leveraging massive language models and targeted dataset training.

As the arms race in this domain advances, we also highlighted the ethics of how imperfect technical systems are positioned, and why inclusive public dialogue must guide their adoption. Getting this balance right promises to realize the detectors' potential while avoiding the risks of over-reliance.

With comprehensive understanding of current capabilities and limitations, constructive discussion and continued progress – output detectors offer promise in adding guardrails as language generation capabilities accelerate. Maintaining public trust throughout this journey remains both the greatest challenge and the noblest goal within reach.