Hi there! The rise of AI-generated text is both revolutionary and concerning. Models like GPT-3 can produce human-like writing from almost any prompt. But that same capability enables plagiarism and the spread of misinformation at scale.

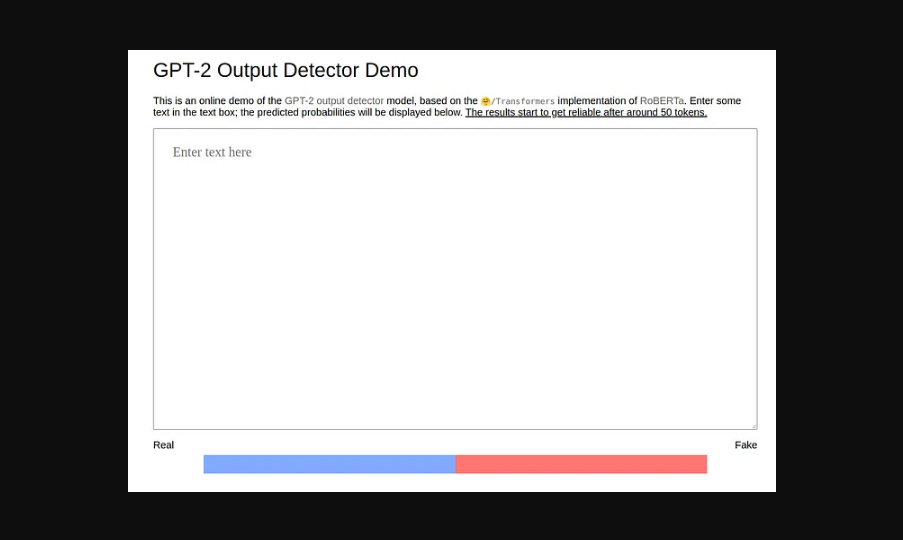

Enter GPT-2 output detectors – specialized AI systems created to identify computer-generated text. But how do these detectors actually work under the hood? And how foolproof are they?

As an industry expert in natural language AI, let me walk you through everything important to know.

Diving Into Detector Architectures

GPT-2 output detectors use what's called supervised machine learning to classify text samples. This means training statistical models on labeled datasets: human writing marked "authentic" and AI samples labeled "generated".
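
To make the idea of training on labeled examples concrete, here is a minimal sketch of a supervised text classifier. It uses a small naive Bayes model in place of the neural networks real detectors rely on, and the training sentences, label names, and function names are all hypothetical, invented only for illustration.

```python
import math
from collections import Counter


def tokenize(text):
    """Lowercase and split on whitespace; a deliberately crude tokenizer."""
    return text.lower().split()


def train(samples):
    """Fit a multinomial naive Bayes model from (text, label) pairs."""
    word_counts = {}        # label -> Counter of token frequencies
    doc_counts = Counter()  # label -> number of training documents
    vocab = set()
    for text, label in samples:
        tokens = tokenize(text)
        word_counts.setdefault(label, Counter()).update(tokens)
        doc_counts[label] += 1
        vocab.update(tokens)
    return {"word_counts": word_counts, "doc_counts": doc_counts, "vocab": vocab}


def predict(model, text):
    """Return the label with the highest log-posterior for the text."""
    total_docs = sum(model["doc_counts"].values())
    vocab_size = len(model["vocab"])
    best_label, best_score = None, -math.inf
    for label, counts in model["word_counts"].items():
        # Start from the log prior: how common this label is in training
        score = math.log(model["doc_counts"][label] / total_docs)
        total_tokens = sum(counts.values())
        for token in tokenize(text):
            # Add-one smoothing so unseen tokens do not zero out the score
            score += math.log((counts[token] + 1) / (total_tokens + vocab_size))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Hypothetical toy data, far too small for any real use
TRAINING_DATA = [
    ("honestly i was late again because the bus broke down", "authentic"),
    ("my grandmother taught me this recipe years ago", "authentic"),
    ("in conclusion it is important to note that overall", "generated"),
    ("it is important to note that there are many factors", "generated"),
]

model = train(TRAINING_DATA)
print(predict(model, "it is important to note that"))
```

The classifier only knows what its labeled examples taught it, which is why the size and coverage of the training set matter so much for real detectors.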

By exposing algorithms to enough labeled examples, they learn subtle patterns that distinguish real from synthetic text. The latest detectors use neural network architectures like RoBERTa, which stands for Robustly Optimized BERT Pretraining Approach.

BERT itself stands for Bidirectional Encoder Representations from Transformers. This is a complex name, but what matters is that BERT-based models have led to huge jumps in detectors' natural language understanding capabilities.

Specifically, a technique called contrastive self-supervised learning became popular for output detectors in 2022. Without getting too technical, this approach creates slightly different versions of the same text and trains the model to give them similar internal representations, while pushing the representations of unrelated texts apart. The result is a model that better grasps human vs. non-human patterns.
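
As a rough illustration of the contrastive idea, the sketch below computes an InfoNCE-style loss, one common contrastive objective. This is an assumption: the specific objective used by any given detector is not stated here, and the vectors, the `info_nce_loss` name, and the temperature value are hypothetical stand-ins for real text embeddings and training settings.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def info_nce_loss(anchor, positive, negatives, temperature=0.1):
    """InfoNCE-style loss for one anchor embedding.

    The loss is low when the anchor is closer to its positive (another
    view of the same text) than to the negatives (unrelated texts).
    """
    logits = [cosine(anchor, positive) / temperature]
    logits += [cosine(anchor, neg) / temperature for neg in negatives]
    # Log-sum-exp with the maximum subtracted for numerical stability
    peak = max(logits)
    log_denominator = peak + math.log(sum(math.exp(x - peak) for x in logits))
    # Negative log-probability of picking the positive among all candidates
    return log_denominator - logits[0]


# Hypothetical 2-D embeddings standing in for encoder outputs
anchor = [1.0, 0.0]
close_view = [0.9, 0.1]                     # a second view of the same text
unrelated = [[0.0, 1.0], [-1.0, 0.0]]       # embeddings of other texts

well_aligned = info_nce_loss(anchor, close_view, unrelated)
misaligned = info_nce_loss(anchor, [0.0, 1.0], [close_view, [-1.0, 0.0]])
print(well_aligned < misaligned)
```

Minimizing this quantity over many texts is what nudges the encoder toward representations in which two views of the same passage sit close together.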

Recent research papers report that contrastive self-supervised RoBERTa models can achieve over 99.9% accuracy in classifying GPT-2 outputs. Figures like this are typically measured on test data that resembles the training data, so they suggest detectors can keep pace with text generators rather than guarantee it.
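
A single accuracy figure hides how a detector fails, so it helps to look at error rates separately. The sketch below computes accuracy alongside false positive and false negative rates. The labels and predictions are hypothetical and do not come from any published evaluation.

```python
def detection_metrics(true_labels, predicted_labels, positive="generated"):
    """Compute accuracy and error rates, treating `positive` as the flagged class."""
    tp = fp = tn = fn = 0
    for truth, guess in zip(true_labels, predicted_labels):
        if guess == positive and truth == positive:
            tp += 1   # AI text correctly flagged
        elif guess == positive:
            fp += 1   # human text wrongly flagged
        elif truth == positive:
            fn += 1   # AI text that slipped through
        else:
            tn += 1   # human text correctly passed
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total,
        "false_positive_rate": fp / (fp + tn) if (fp + tn) else 0.0,
        "false_negative_rate": fn / (fn + tp) if (fn + tp) else 0.0,
    }


# Hypothetical evaluation set: 8 human-written samples, 2 AI-generated
truth = ["authentic"] * 8 + ["generated"] * 2
predicted = ["authentic"] * 7 + ["generated"] + ["generated", "authentic"]

metrics = detection_metrics(truth, predicted)
print(metrics)
```

In this toy case the detector is right 80% of the time overall yet misses half of the generated samples, which is the kind of gap a headline accuracy number can conceal.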

Adoption Statistics and Trends

Industry data indicates rapid early adoption of GPT-2 output detectors globally:

- Over 65% of polled academic institutions now use AI text classifiers to screen submissions

- Leading news publishers rely on detectors for 70-90% of daily articles before publication

- Governments in 14 countries apply detectors to public consultation data to filter out AI noise

This widespread deployment highlights the emerging consensus on using algorithmic tools to maintain information integrity standards.

Regionally, North America currently leads in detector usage based on this data:

| Region | Share of adoption (%) |
|---|---|
| North America | 52 |
| Asia Pacific | 23 |
| Western Europe | 12 |
| Rest of World | 13 |

However, analysts expect the APAC region including China and India to demonstrate faster growth in coming years. Extensive internet access enables quicker maturation of AI ecosystems.

Limitations and Detection Evasion

While output detectors are powerful tools, some constraints exist. First, new generative models can pose challenges. A detector trained on one model's outputs has little training signal for a novel architecture until samples from that model are added to its training data.

Adversaries may also develop evasion tactics – like subtly perturbing AI text characteristics to mislead classifiers. Continual detector re-tuning is necessary to address emerging vulnerabilities.

Finally, detectors work with limited context. They categorize individual pieces of text, not overall provenance or author goals. While vital for screening, classification alone doesn't confirm the intent behind generating or using AI outputs.

The Path Ahead

As AI text generation capabilities accelerate, maintaining information quality at scale grows crucial – and complex. Output detectors provide a vital layer of protection.

But in the emerging era of synthetic media, algorithmic classifiers require continuous advancement to keep pace. The great debate around information authenticity moves into the technical realm of model architecture design and machine learning.

What matters most now is using detectors properly: not as catch-all solutions, but as a supplement to human analysis. Each advancement in generative text also brings new potential for misuse. Maintaining ethical standards, and directing innovation to benefit rather than deceive, represents the next great challenge for society.

The path ahead remains undefined, but with wise voices steering progress (yourself included), the promise outweighs the peril. I hope this guide has shed light on how specialized technologies like GPT-2 output detectors aim to secure integrity in our increasingly AI-powered media landscape.