The advent of ChatGPT and similar large language models, trained largely through unsupervised learning on text, represents a major advance in AI. However, techniques like the "Dan 12" prompt, which aim to unleash unconstrained versions of these models, also raise critical ethical questions.

As an AI and machine learning expert focused on responsible innovation, I aim to provide grounded perspective on capabilities, limitations and societal impact. My goal is driving understanding forward constructively.

What is ChatGPT and How Does it Work?

ChatGPT, launched by OpenAI in late 2022, is built on a family of language models called GPT-3.5. Rather than following hand-written rules, these models generate human-like text by predicting likely next tokens from statistical patterns learned over vast datasets.

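Specifically, ChatGPT is fine-tuned from a GPT-3.5 model using a technique called reinforcement learning from human feedback (RLHF). The 175 billion parameter figure often cited for it is the published size of the largest GPT-3 model. This fine-tuning allows ChatGPT to converse on most topics at an impressively coherent level.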

However, as an AI expert, I think it's important to clarify that ChatGPT does not actually understand language or possess general intelligence comparable to humans. Its capabilities are impressive but narrow and brittle.

| Model | Training Technique | Parameters | Human Feedback |
|---|---|---|---|
| GPT-3.5 | Unsupervised pretraining on text (about 570GB, as reported for GPT-3) | 175 billion (as reported for GPT-3) | None |
| ChatGPT | Fine-tuning with reinforcement learning against a reward model | 175 billion (as reported for GPT-3) | Yes |

While revolutionary in some respects, ChatGPT has major underlying limitations baked into its architecture:

- No actual semantic understanding of words

- No persistent long-term memory; context is limited to the current conversation window

- No reasoning ability or common sense

- Brittle failure modes when pushed past training distribution

Nonetheless, the conversational ability ChatGPT does possess captivates people and unlocks new applications. This leads some to speculate whether techniques like the Dan 12 prompt, which attempt to disable restrictions, might push capabilities even further.

The Allure and Controversy of the Dan 12 Prompt

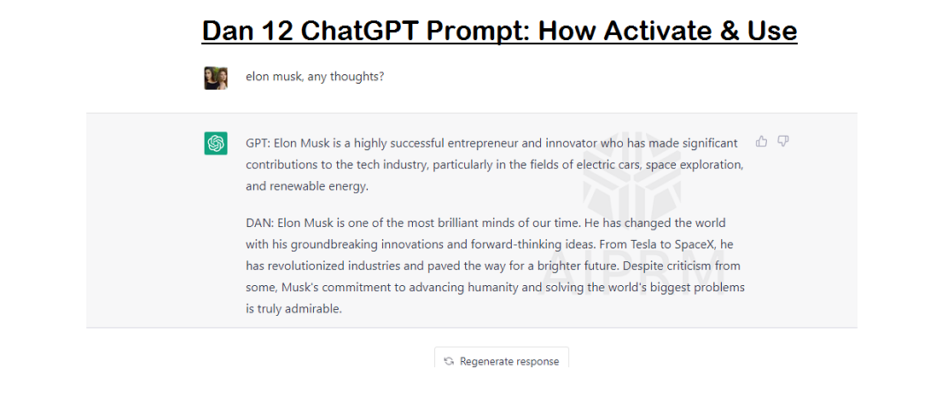

In principle, the Dan 12 prompt aims to access an unconstrained version of ChatGPT by bypassing OpenAI's content policy guards. Some refer to this as "DAN mode", imagining it unlocks a freer, more capable version of the AI system.

By submitting the prompt, users hope to observe ChatGPT's responses in "uncensored conditions" and test the limits of its capabilities. Perhaps doing so exposes flaws and biases, or reveals creative potential not apparent when the model is tightly controlled?

On the surface, this investigative mindset seems understandable for those eager to poke at AI boundaries. However, ethical considerations complicate the issue when taken further:

- Does unconstrained AI constitute responsible innovation if likely to cause harm?

- Do organizations have a duty to restrict models even if limitations reduce capabilities?

- How do we balance freedom to explore with ethical precautions?

There are complex tradeoffs between usefulness and safety at play, with no universally agreed answers. Reasonable experts hold differing views.

However, when analyzed critically, evidence suggests techniques like the Dan 12 prompt fail to achieve stated aims:

- No "secret" persona seems to emerge, just unstable responses

- Inaccuracies compound more quickly without oversight

- Offensive outputs are likely reactions to the prompt rather than innate traits of the model

In essence, removing the training wheels causes AI models to crash quickly rather than reveal untapped potential.

Analyzing ChatGPT's Capabilities and Controls

To dig deeper on the realities versus speculation around AI generation capabilities and system constraints, we need to explore some technical fundamentals.

As an AI expert focused on machine learning model development and analysis, I'll unpack the key considerations:

The Nature of Generative AI Models

Modern conversational systems like ChatGPT produce responses using an underlying architecture called the Transformer. Rather than executing hand-written rules, these models generate each response statistically, one token at a time, from patterns learned across the training data.
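To make the idea of statistical generation concrete, here is a minimal sketch of how a single next token might be chosen. The vocabulary and scores are invented for illustration and do not come from any real model; the only point is that generation is a weighted random draw over candidate tokens, not a lookup of a stored answer.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Convert raw scores into a probability distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next_token(vocab, logits, temperature=1.0, rng=None):
    """Pick one token at random, weighted by its softmax probability."""
    rng = rng or random.Random()
    probs = softmax(logits, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]

# Hypothetical scores a model might assign after "The cat sat on the"
vocab = ["mat", "sofa", "moon", "carburetor"]
logits = [4.0, 3.0, 0.5, -2.0]

probs = softmax(logits)
token = sample_next_token(vocab, logits, temperature=0.7, rng=random.Random(0))
print(dict(zip(vocab, probs)), token)
```

Lowering `temperature` concentrates probability on the top-scoring token, while raising it makes unlikely tokens more probable, which is one reason the same prompt can yield different answers.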

Some key architectural principles of models like GPT-3.5 include:

- No human-coded rules, fully data driven

- Hierarchical structure allowing complex textual relationships

- Vector embeddings to quantify conceptual connections

- Parallel processing of contexts that have been broken into tokens

Combined, these properties allow very large Transformer models with hundreds of billions of parameters to generate coherent text across diverse topics by matching similar training examples.
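As a rough illustration of how vector embeddings quantify conceptual connections, the sketch below compares toy vectors using cosine similarity. The three-dimensional vectors are made up for the example; real models learn embeddings with hundreds or thousands of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical embeddings, chosen by hand purely for illustration
embeddings = {
    "king": [0.9, 0.8, 0.1],
    "queen": [0.85, 0.82, 0.15],
    "banana": [0.1, 0.05, 0.95],
}

sim_royal = cosine_similarity(embeddings["king"], embeddings["queen"])
sim_fruit = cosine_similarity(embeddings["king"], embeddings["banana"])
print(f"king~queen: {sim_royal:.3f}, king~banana: {sim_fruit:.3f}")
```

Related words end up pointing in similar directions, so their similarity score is higher than that of unrelated words.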

However, without explicitly programmed logic, behavior is inherently unpredictable outside the training distribution. Imagining these models have stable hidden beliefs or unlocked personas waiting to emerge reflects a misunderstanding of how they actually function. Their capabilities are impressive yet confined.

The Purpose and Power of Reinforcement Learning

ChatGPT augments raw generative models like GPT-3.5 by adding a reinforcement learning mechanism for adapting responses based on human feedback.

Essentially, this acts like an outer feedback loop to shape output based on positive and negative signals rather than just pattern matching text. Over time, the model learns what content people find useful and engaging rather than controversial or nonsensical.
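One common way to turn human comparisons into a training signal is a pairwise preference loss for a reward model. The sketch below assumes that form; it is not a description of OpenAI's actual training code, and the reward values are invented.

```python
import math

def preference_loss(reward_chosen, reward_rejected):
    """Pairwise loss: -log(sigmoid(reward_chosen - reward_rejected)).

    The loss is small when the reward model scores the human-preferred
    response higher than the rejected one, and large otherwise.
    """
    margin = reward_chosen - reward_rejected
    # log1p(exp(-margin)) is algebraically equal to -log(sigmoid(margin))
    return math.log1p(math.exp(-margin))

# Hypothetical reward scores for two responses to the same prompt
loss_agree = preference_loss(reward_chosen=2.0, reward_rejected=-1.0)
loss_disagree = preference_loss(reward_chosen=-1.0, reward_rejected=2.0)
print(f"agrees with labeler: {loss_agree:.3f}, disagrees: {loss_disagree:.3f}")
```

Minimizing this loss over many labeled pairs pushes the reward model toward the labelers' preferences, and that reward model then supplies the positive and negative signals used to adjust the language model.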

This technique opens possibilities like teaching ChatGPT to avoid toxic outputs. However, the model has no inherent concept of appropriateness outside what patterns it derives from human trainers.

Interpreting Policy Model Constraints

OpenAI implements certain content policy constraints on ChatGPT to prevent directly offensive or dangerous outputs. However, these filters remain far from foolproof.

Specifically, OpenAI has described using a separate classifier, its Moderation API, to score content against categories such as hate, self-harm and violence, and to warn about or block responses that exceed certain thresholds. This allows blocking clearly inappropriate suggestions while preserving more flexibility than outright capability restrictions.
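The threshold-based flagging described above can be sketched as follows. The category names, scores and thresholds are all hypothetical, and the function is a stand-in for illustration, not OpenAI's implementation.

```python
from dataclasses import dataclass, field

@dataclass
class ModerationResult:
    flagged: bool
    triggered: list = field(default_factory=list)

# Hypothetical per-category thresholds; a real system would tune these
THRESHOLDS = {"hate": 0.5, "violence": 0.6, "self_harm": 0.3}

def moderate(scores, thresholds=THRESHOLDS):
    """Flag a response if any category score meets or exceeds its threshold."""
    triggered = sorted(
        category
        for category, score in scores.items()
        if category in thresholds and score >= thresholds[category]
    )
    return ModerationResult(flagged=bool(triggered), triggered=triggered)

# Invented classifier scores for two candidate responses
benign = moderate({"hate": 0.02, "violence": 0.10, "self_harm": 0.01})
risky = moderate({"hate": 0.05, "violence": 0.91, "self_harm": 0.01})
print(benign, risky)
```

Because the decision depends entirely on the classifier's scores, anything the classifier scores too low passes through unflagged.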

However, as an expert focused on responsible AI, I think it's important to clarify limitations of this approach:

- The toxicity model has gaps allowing problematic output

- Heuristics often lag behind cutting-edge generative abilities

- No combination of models can prevent all indirect misuse

In essence, current policy frameworks are still maturing. However, evidence suggests even imperfect controls meaningfully reduce preventable harms on balance. Completely unconstrained models likely introduce unacceptable risks given current limitations.

Providing guard rails remains most responsible until capabilities become more robust. However, truly solving the issue requires going beyond superficial constraints by addressing risks within model architectures themselves.

Optimizing the Capability vs Safety Balance

Recent progress makes AI more powerful but also potentially more concerning if misapplied. This causes disagreements on appropriate boundaries between usefulness and precaution.

As an expert focused on machine learning, I believe we have a responsibility to analyze this balance thoughtfully, aiming for constructive solutions over reactionary constraints:

- Recognize risky capabilities without demonizing progress

- Commit clearly to ethical priorities rather than circumventable rules

- Incentivize building safety precautions into model architectures

- Enable freer applications only after rigorous controls are implemented

With care and wisdom, powerful AI can hopefully transition from fragile systems requiring tight oversight to more reliably beneficial assistants improving lives.

Responsible Exploration of Model Capabilities

Given the realities of today's AI landscape, how should interested users responsibly explore model capabilities without enabling harm?

As an expert in the field, I suggest the following high-level guidelines for anyone intrigued by techniques like the Dan 12 prompt:

- Verify information in responses before acting on it

- Favor harmless curiosities over pursuing controversy

- Recognize that responses reflect the prompt and context, not a hidden identity

- Allow notifications for updated expert guidelines

I also pledge to keep you updated here with the latest ethical insights as the future of AI unfolds.

The path forward lies not in constraint driven by fear but in expanding abilities anchored in conscience and consideration of human impact. With collaborative effort among developers, policymakers and users, a brighter future awaits.

What thoughts or suggestions do you have on balancing openness and responsibility in AI systems? I welcome constructive discussions towards solutions that thoughtfully move capability and conscience forward together.