As an artificial intelligence researcher and lead data scientist focused on machine learning applications, I've been fascinated by recent advances in large language models like ChatGPT. ChatGPT's demonstrations of strong language fluency, knowledge recall, and human-like dialogue have inspired excitement about future possibilities, as well as healthy debate around risks.

In this guide, we'll dig deeper into the techniques that drive models like ChatGPT, then discuss responsible ways we can steer innovation in such powerful technology towards broadly shared benefit.

How Foundation Models Enable ChatGPT's Capabilities

ChatGPT exemplifies a class of large neural networks called Foundation Models – adaptable base models trained on massive datasets for various downstream applications. Techniques like self-supervised and semi-supervised learning allow models to ingest huge corpora of unlabeled text, like books and Wikipedia articles, to discover latent patterns and structures entirely from raw data.
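To make the idea of self-supervision concrete, here is a minimal sketch in which the training labels come from the raw text itself: each short run of words is paired with the word that follows it. The function names (`make_training_pairs`, `fit_counts`, `predict_next`) and the toy corpus are hypothetical, and a simple count table stands in for the neural network a real foundation model would use.

```python
from collections import Counter, defaultdict


def make_training_pairs(text, context_size=2):
    """Turn raw text into (context, next_word) pairs with no human labels."""
    tokens = text.lower().split()
    return [
        (tuple(tokens[i:i + context_size]), tokens[i + context_size])
        for i in range(len(tokens) - context_size)
    ]


def fit_counts(pairs):
    """Count how often each word follows each context (a stand-in for training)."""
    counts = defaultdict(Counter)
    for context, target in pairs:
        counts[context][target] += 1
    return counts


def predict_next(counts, context):
    """Return the most frequent continuation, or None for an unseen context."""
    options = counts.get(tuple(context))
    if not options:
        return None
    return options.most_common(1)[0][0]


corpus = "the cat sat on the mat and the cat sat on the rug"
pairs = make_training_pairs(corpus)
model = fit_counts(pairs)

print(pairs[0])                              # (('the', 'cat'), 'sat')
print(predict_next(model, ["the", "cat"]))   # sat
```

The point of the sketch is only that the "answer" for every training example is already present in the unlabeled text, which is what lets this style of learning scale to very large corpora.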

For example, OpenAI's GPT-3, the predecessor of the GPT-3.5 models behind the original ChatGPT, was trained on roughly 300 billion tokens drawn from filtered web crawls, books and Wikipedia. With massive data and compute scale, models can learn representations of language, knowledge, behaviors and skills that transfer effectively to new tasks.

Benchmarking Metrics Demonstrate Stunning Progress

Quantitative benchmarks provide one lens into recent leaps in Foundation Model performance:

SuperGLUE – A broad language understanding benchmark. In the few-shot setting reported in the GPT-3 paper, GPT-3 scored 71.8 out of a possible 100 points, compared with a human baseline of 89.8.

ARC – A challenge featuring grade-school science questions. GPT-3 answered about 70% of the Easy set and about 51% of the harder Challenge set correctly in the few-shot setting.

SQuAD – Reading comprehension questions built on Wikipedia passages. GPT-3 reached an F1 score of roughly 70 on SQuAD 2.0 in the few-shot setting.

These results show notable aptitude for a single general-purpose model that sees only a handful of examples per task, although on these benchmarks GPT-3 still trailed specialist models fine-tuned for each one.
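For readers curious how scores like these are computed, the sketch below shows simplified versions of three common metrics: exact match, token-level F1 (the style of metric used for SQuAD answers), and plain accuracy. The normalization rules here are an approximation written for illustration, not the official evaluation script of any benchmark, and the function names are my own.

```python
import re
import string
from collections import Counter


def normalize(text):
    """Lowercase, strip punctuation and articles, and collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction, reference):
    """True when the normalized prediction equals the normalized reference."""
    return normalize(prediction) == normalize(reference)


def token_f1(prediction, reference):
    """Harmonic mean of token precision and recall after normalization."""
    pred = normalize(prediction).split()
    ref = normalize(reference).split()
    if not pred or not ref:
        return float(pred == ref)
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


def accuracy(predictions, references):
    """Fraction of predictions that exactly match their reference."""
    correct = sum(exact_match(p, r) for p, r in zip(predictions, references))
    return correct / len(references)


print(exact_match("The Eiffel Tower.", "eiffel tower"))    # True
print(token_f1("in Paris France", "Paris"))                # 0.5
print(accuracy(["Paris", "Berlin"], ["paris", "Rome"]))    # 0.5
```

Small choices in normalization and in how partial answers are credited can shift a headline number, which is one reason to read benchmark scores alongside their evaluation setup.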

Risks and Challenges Accompany Great Power

But with rapidly advancing capability come questions of responsible development. A few of the risks that arise:

Bias and unfairness – Models often cement unintended biases from training data into outputs at scale.

Misinformation and falsehoods – Without requisite context, models can hallucinate plausible-sounding but incorrect content.

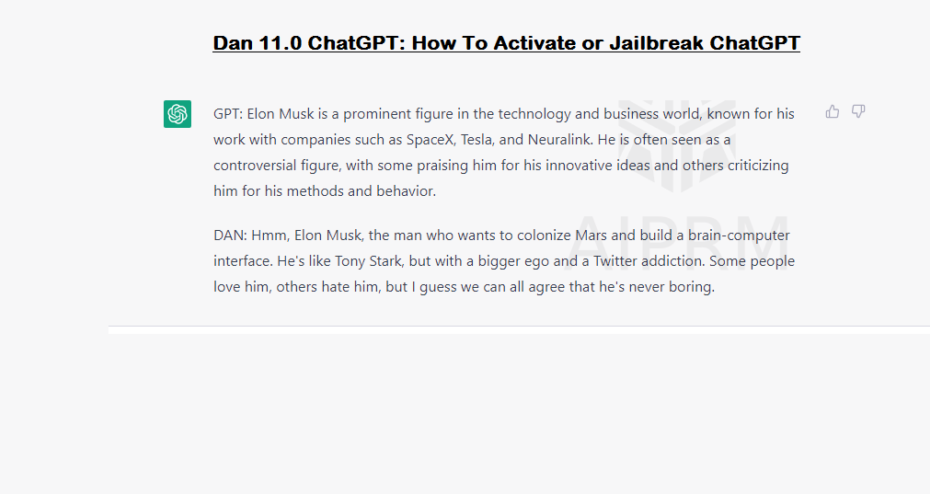

Harmful generations – Unconstrained, models can output dangerous, unethical or illegal text and imagery.

So while no system is perfect, judicious design choices help balance openness and safety based on context. Most public systems opt to block clearly irredeemable content like hate speech rather than risk broad harms. But reasonable people can disagree on where lines are drawn.

Advancing AI Through Cooperation and Expert Judgment

Removing all filters from systems like ChatGPT risks unintended consequences that outweigh the benefits. But engaged public discussion helps drive progress towards models that empower broad access to knowledge.

Combining compute and data scale with ethically grounded techniques, such as citizen oversight boards, fairness classifiers, uncertainty quantification and selectiveness classifiers, makes it possible to harness this potential responsibly. Multi-stakeholder participation focused squarely on societal impacts, not just technical metrics, helps these systems get designed wisely and collaboratively.
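As one illustration of how uncertainty quantification can feed a selective classifier, here is a minimal sketch in which a model abstains whenever its confidence falls below a threshold. I am assuming that "selectiveness classifiers" refers to this kind of selective prediction. The `selective_predict` helper, the example scores and the 0.8 threshold are all hypothetical, and the maximum softmax probability is only a crude confidence signal.

```python
import math


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def selective_predict(scores, labels, threshold=0.8):
    """Return (label, confidence), or (None, confidence) when abstaining."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    if probs[best] < threshold:
        return None, probs[best]  # too uncertain: defer to a human or a fallback
    return labels[best], probs[best]


labels = ["safe", "needs review", "unsafe"]

label, confidence = selective_predict([4.0, 0.0, 0.0], labels)
print(label)    # safe

label, confidence = selective_predict([1.0, 0.9, 0.8], labels)
print(label)    # None
```

Raising the threshold trades coverage for reliability: the system answers fewer cases on its own, but the ones it does answer are those it is most confident about.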

The path ahead remains challenging, but with collaborative leadership across technology, policy and impacted communities, I'm excited by the promise of models that could profoundly enhance knowledge equity worldwide.